Proof Measurement and Estimation

Proof Measurement and Estimation is an activity of the Trustworthy Systems group.

|

|

Latest news:

- June 2015 - Mark Staples has been invited to give a talk at ASWEC 2015 about the ICSE 2015 paper.

- May 2015 - Dan Matichuk presented Empirical Study Towards a Leading Indication for Cost of Formal Software Verification in the main technical track at ICSE 2015.

- January 2015 - An Empirical Research Agenda for Understanding Formal Methods Productivity was published in Information and Software Technology.

-

Aims: understand how to measure and estimate large-scale formal verification projects by:

- investigating measures for the size and complexity of formal verification artefacts (e.g. formal specifications, invariants, and proofs),

- proposing models and approaches to estimate the size and effort required to complete formal verification projects,

- empirically evaluating all of the above using NICTA's past and present formal verification projects.

-

Milestones:

- Feb 2015:

Empirical analysis of leading indicators of proof size.

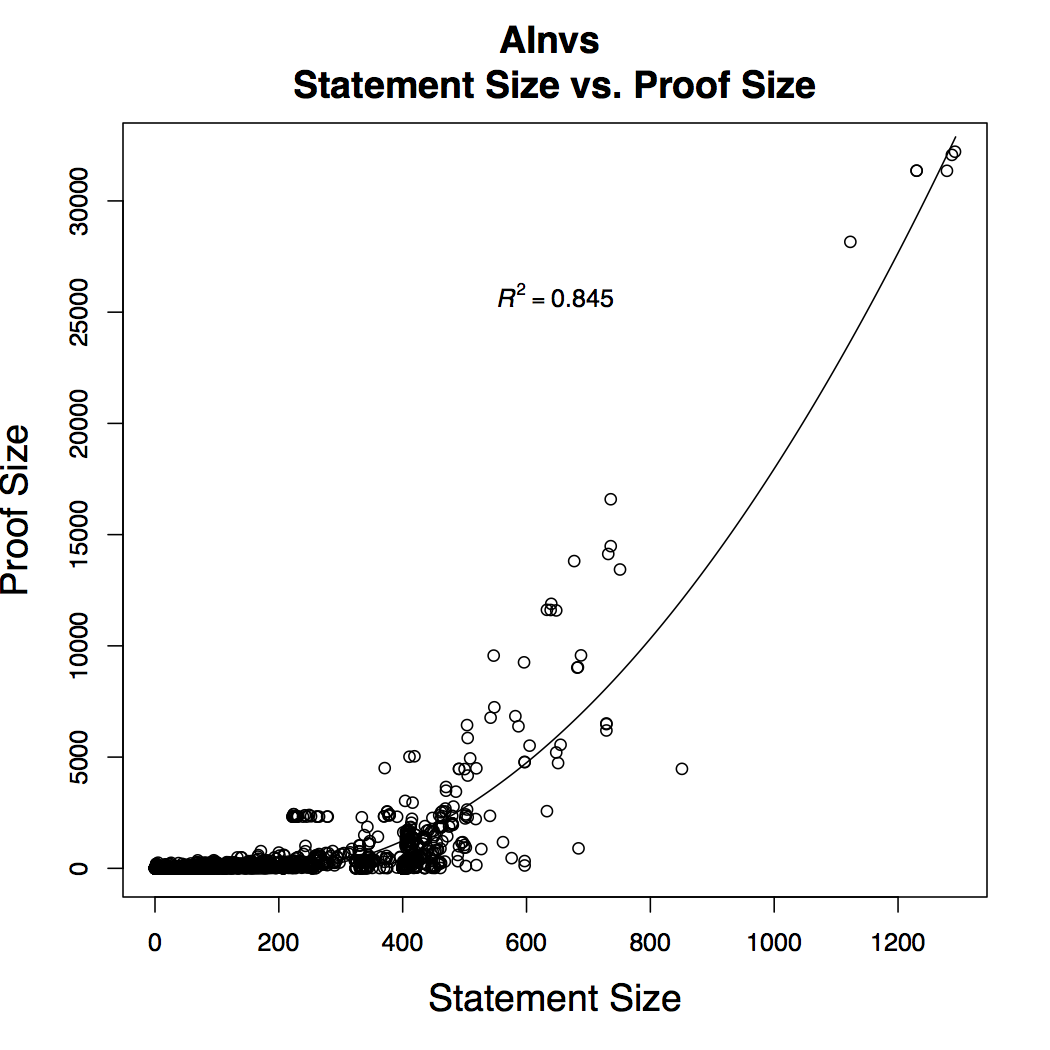

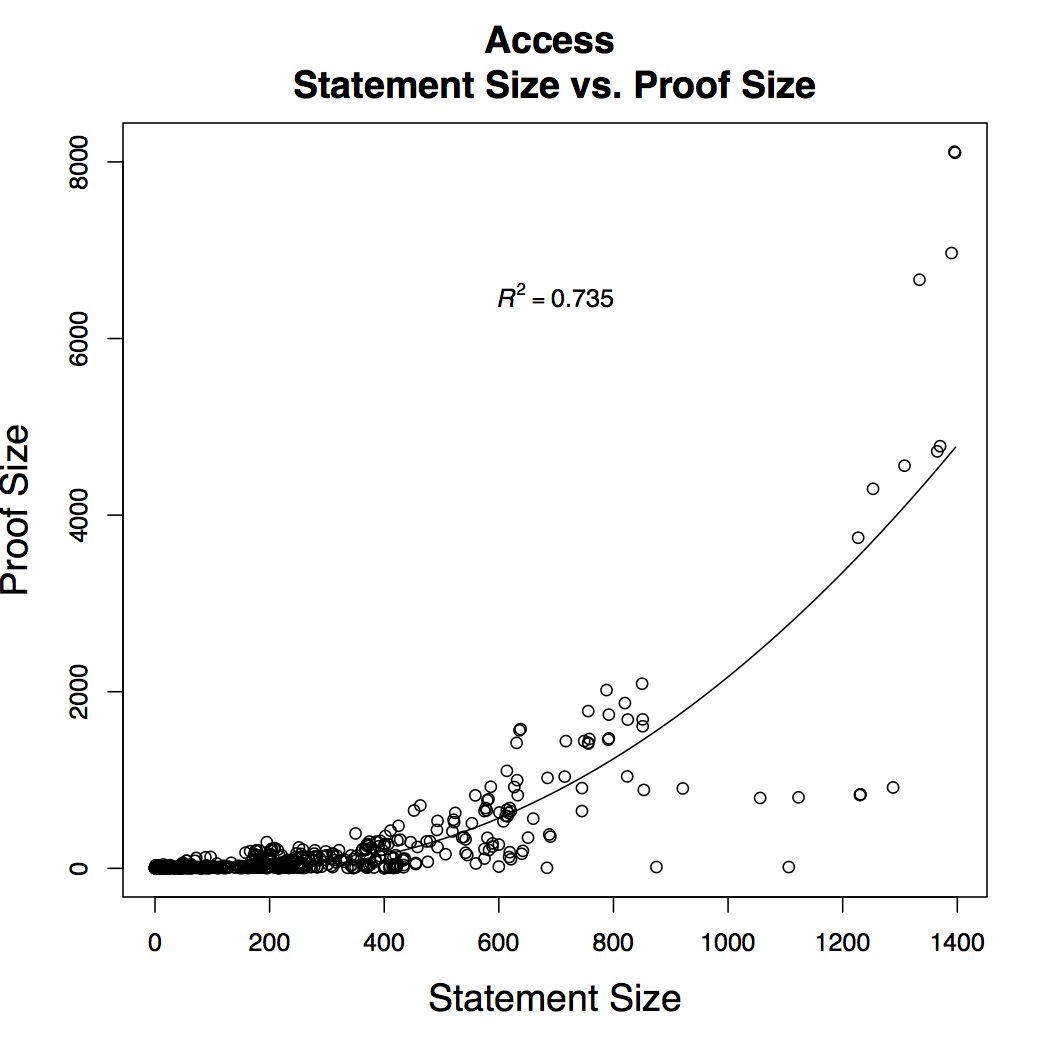

- Outcome: consistent quadratic relationship between size of formal statements and size of their proof, for 15,018 individual lemmas from seL4 verification and the Archive of Formal proofs.

- Paper: Empirical Study Towards a Leading Indicator for Cost of Formal Software Verification (ICSE'15).

- Jan 2015:

Research agenda for empirical study of productivity

in software projects using formal verification.

- Outcome: identification of more than thirty research questions, and associated metrics, in need of investigation.

- Paper: An Empirical Research Agenda for Understanding Formal Methods Productivity (IST'15).

- Sep 2014:

Analysis of relationship between proof size and effort.

- Outcome: strong linear relationship between effort and proof size for projects et for individuals, for 9 projects associated with L4.verified.

- Paper: Productivity for Proof Engineering (ESEM'14).

- May 2013:

Analysis of measures of code and formal specification.

- Outcome: strong relationship between specificatoin size and code size, whereas no significant relationship between Function Point and code size.

- Paper: Formal Specification Better than Function Points for Code Sizing (ICSE'13).

- Jun 2012:

Simulation model of L4.verified middle-out process.

- Outcome: first simulation model of a formal verification process; investigation of possible impacts of process decisions.

- Paper: Simulation Modeling of a Large-Scale Formal Verification Process (ICSSP'12).

- Jun 2012:

Analysis of large-scale formal verification projects from

a process point of view.

- Outcome: descriptive process model of L4.verified "middle-out" development process; identification of key success factors and comparison to literature.

- Paper: Large-Scale Formal Verification in Practice: A Process Perspective (ICSE'12).

- Feb 2015:

Empirical analysis of leading indicators of proof size.

-

Context:

In traditional software engineering, development teams start with a requirements specification, design the system, implement it in a programming language, and then create and run test cases to check whether the system is built as designed and as required. However, because computer systems are so complex, testing can never check all possible behaviors of the system.

Software engineering with formal methods is different. Teams start with a formal specification, which is a mathematical description of the requirements and/or design. Teams then use mathematical proof to show that the implemented system functions as designed and as required. This is called formal verification, and can provide an exhaustive check of all possible behaviors of the software. (Given some reasonable idealising assumptions about the underlying platform.)

The formal verification projects undertaken at NICTA create large machine-checked mathematical proofs, authored by teams of people. However, running these large formal verification projects is a challenge. Estimating the size and effort of these projects is a key management challenge, but almost nothing is known about how to do this well.

-

Technical research challenges:

- Are there better measures of the size of a formal verification proof than Lines of Proof?

- Given a formal specification and a correct implementation, how big will the verification proof be?

- As invariants are incrementally discovered during formal verification, what impact does that have on proof rework?

- How much proof rework is caused by finding and fixing implementation bugs during formal verification?

- After a formal verification project has started, how much longer will it take to finish?

-

Previous Events:

- May 2013 — Our paper, Formal Specifications Better Than Function Points for Code Sizing was presented in the New Ideas and Emerging Results track at ICSE 2013.

- March 2013 — Gerwin Klein presented at IFIP WG 1.9/2.15 meeting, on When are we done with OS verification?, including discussion of verification productivity and repeatability

- July 2012 — Mark Staples presented at a Dagstuhl seminar, on Finding and Responding to Failure in Large Formal Verifications, including discussion of proof rework

- May 2012 - A number of people participated in a joint Workshop at ISCAS in Beijing.

-

Publications:

Daniel Matichuk, Toby Murray, June Andronick, Ross Jeffery, Gerwin Klein and Mark Staples

Empirical study towards a leading indicator for cost of formal software verification

International Conference on Software Engineering, pp. 11, Firenze, Italy, February, 2015

Ross Jeffery, Mark Staples, June Andronick, Gerwin Klein and Toby Murray

An empirical research agenda for understanding formal methods productivity

Information and Software Technology, Volume 60, pp. 102–112, January, 2015

Mark Staples, Ross Jeffery, June Andronick, Toby Murray, Gerwin Klein and Rafal Kolanski

Productivity for proof engineering

Empirical Software Engineering and Measurement, pp. 15, Turin, Italy, September, 2014

Mark Staples, Rafal Kolanski, Gerwin Klein, Corey Lewis, June Andronick, Toby Murray, Ross Jeffery and Len Bass

Formal specifications better than function points for code sizing

International Conference on Software Engineering, pp. 1257–1260, San Francisco, USA, May, 2013

June Andronick, Ross Jeffery, Gerwin Klein, Rafal Kolanski, Mark Staples, He (Jason) Zhang and Liming Zhu

Large-scale formal verification in practice: A process perspective

International Conference on Software Engineering, pp. 1002–1011, Zurich, Switzerland, June, 2012

He (Jason) Zhang, Gerwin Klein, Mark Staples, June Andronick, Liming Zhu and Rafal Kolanski

Simulation modeling of a large scale formal verification process

International Conference on Software and Systems Process, pp. 3–12, Zurich, Switzerland, June, 2012

People

Past

|